Is AI the End of Data Science? Top 5 Ways to use AI for Data Science & Analytics

The question of how AI is impacting data science is top of mind for everyone in the field. As someone who has been working in the field for the last 15 years, I have seen many shifts take place. My view is that AI isn’t the “end of data science”, and we can all benefit from carving out the right role for AI in our daily work.

Below I share the top 5 ways to use AI for Data Science & Analytics – I plan to post a YouTube video that will go into more detail on this topic soon – so watch that space! I'll link a playlist at the end of the article for quick access!

I’ve ordered these ideas from “basic”/ “standard” use cases to more “complex” ones, based on what we are asking AI to do relative to its strengths and how long it might take to refine our prompts.

1. Using AI to Check our Code/ Scripts for Troubleshooting

As data scientists and analysts, we spend a fair amount of time writing code in order to execute our projects. This code covers pulling data, cleaning/ processing data, analyzing data, outputting results for our deliverables as well as deploying and maintaining deployments.

Everyone has a different approach to coding that is heavily influenced by their background and how they came to start coding. Even so, there are largely two methods: writing code from scratch or adapting/ modifying existing code.

We all use some combination of these two approaches, both for coding tasks we are experienced with and for brand new coding tasks, and that combination has a direct relationship to how many errors are made, how much troubleshooting is required, and how long it takes us to complete various coding tasks.

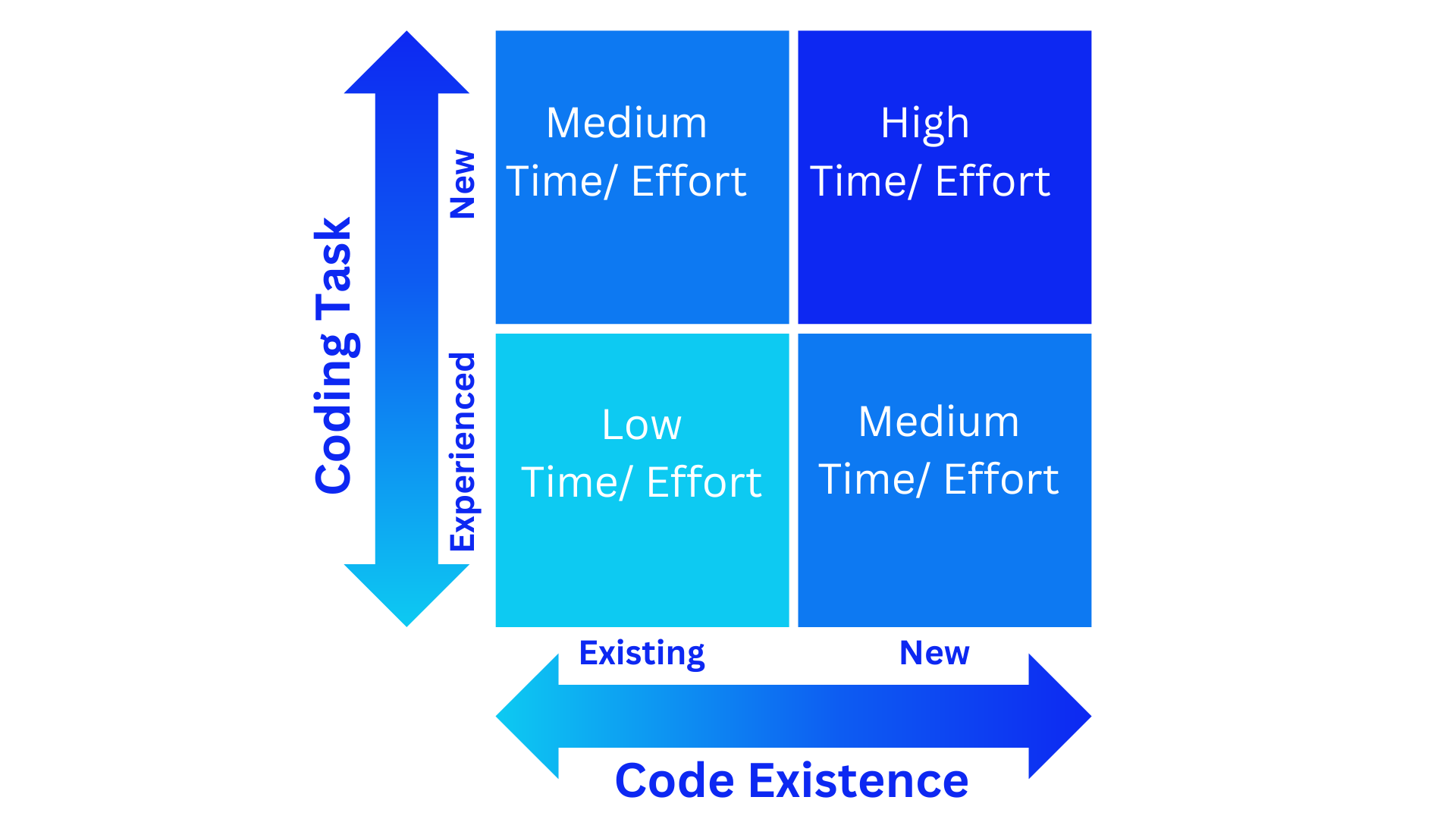

This matrix illustrates how these two factors, coding task and code status, relate to the time and effort needed.

New Task v. Experienced Task. New Code or Modifying Existing Code

If we are thinking about where AI can provide the most benefit for our daily coding work, it would be in that top right corner of “high” time and effort: a new coding task we aren’t experienced with that also requires brand new code (no existing code to adapt). We can leverage AI as a tutor of sorts to quickly iterate through bugs, solutions, and suggestions and get our code in working order.
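To make the matrix concrete, here is a minimal sketch that writes the four boxes as a lookup table. The names `EFFORT_MATRIX` and `effort_level` are my own illustrative choices, not part of any tool; the levels come straight from the matrix described in this section.

```python
# Keys are (task familiarity, code status); values are the time/effort level.
# Labels follow the matrix in this section; the names are illustrative only.
EFFORT_MATRIX = {
    ("new", "new"): "high",               # new task, brand new code
    ("new", "existing"): "medium",        # new task, adapting existing code
    ("experienced", "new"): "medium",     # familiar task, brand new code
    ("experienced", "existing"): "low",   # familiar task, adapting existing code
}


def effort_level(task: str, code: str) -> str:
    """Return the time/effort box for a coding job."""
    key = (task, code)
    if key not in EFFORT_MATRIX:
        raise ValueError(f"Unknown combination: {key}")
    return EFFORT_MATRIX[key]


print(effort_level("new", "new"))               # high
print(effort_level("experienced", "existing"))  # low
```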

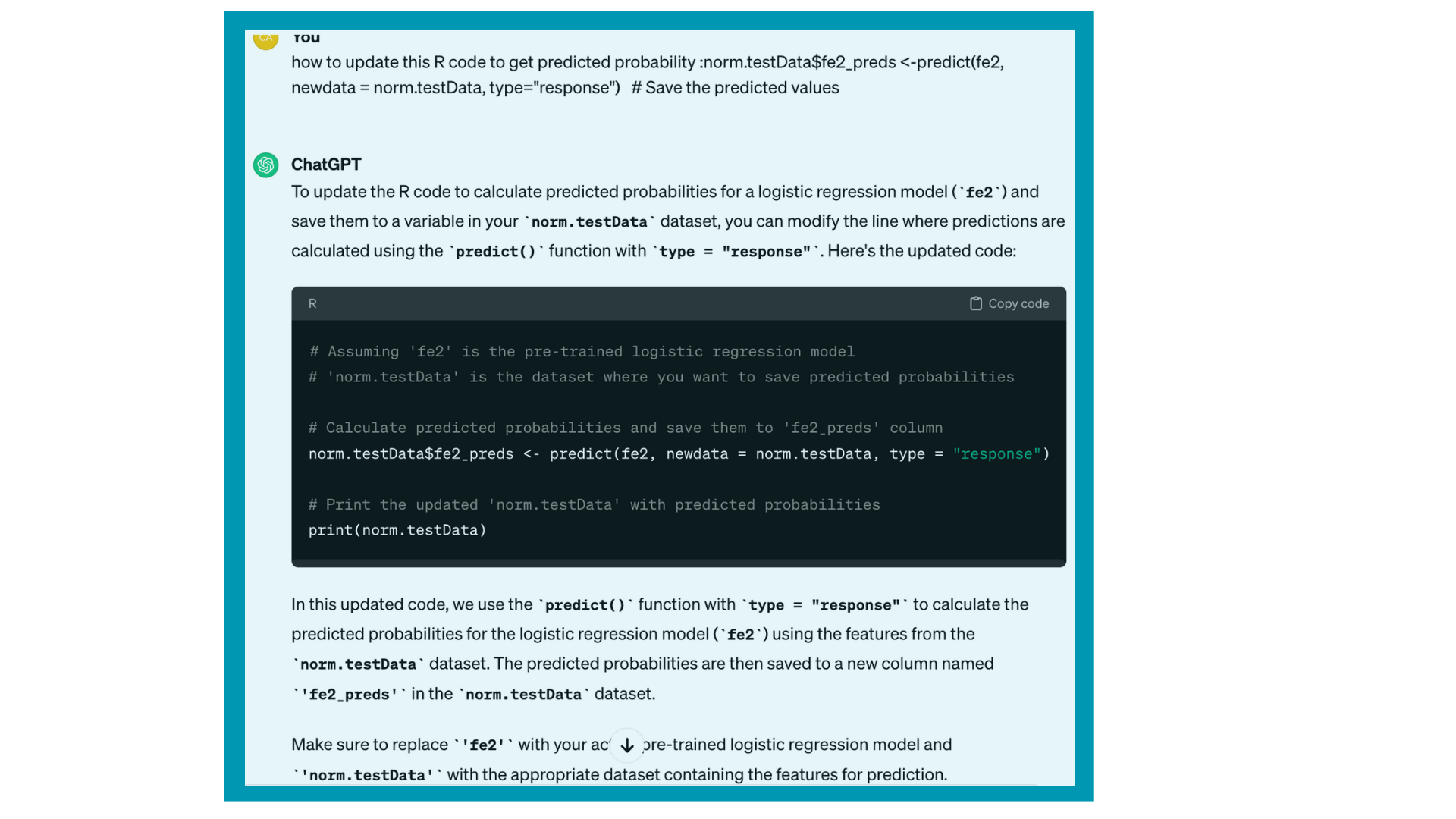

Here is an example of using ChatGPT to quickly modify some existing code for a new use case (a "medium" time/effort example).

However, for the “high” box, given the “newness” of both the task and the code, I would strongly recommend developing some QA review guidelines for assessing the outputs and validating that code developed with AI assistance is in fact working as expected.

For both “medium” boxes – since we have some familiarity with the code or the task, we can still leverage AI and perhaps be lighter on the QA requirements for assessing output relative to the “high” box.

For the “low” box – if you’re adapting existing code for a coding task you’re experienced in, AI may actually slow you down if you already know what you want to do, and you have to spend extra time to QA review the AI assistance/ suggestions.
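As one possible starting point for QA review guidelines, the sketch below compares an AI-assisted function against a trusted reference on many random inputs and collects any disagreements. Everything here is an assumption for illustration: `ai_assisted_median` stands in for whatever code you developed with AI help, and `qa_check` is a hypothetical helper name. Python's built-in `statistics.median` plays the role of the trusted reference.

```python
import random
import statistics


def ai_assisted_median(values):
    """Stand-in for a function written with AI assistance (hypothetical)."""
    ordered = sorted(values)
    n = len(ordered)
    mid = n // 2
    if n % 2 == 1:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2


def qa_check(candidate, reference, n_trials=200, seed=0):
    """Run candidate and reference on random inputs; return the inputs where they disagree."""
    rng = random.Random(seed)  # fixed seed so the review is repeatable
    failures = []
    for _ in range(n_trials):
        size = rng.randint(1, 25)
        data = [rng.randint(-100, 100) for _ in range(size)]
        if candidate(data) != reference(data):
            failures.append(data)
    return failures


failures = qa_check(ai_assisted_median, statistics.median)
print(f"{len(failures)} disagreements found")
```

An empty list of failures is not proof that the code is correct, but a non-empty list gives you concrete inputs to take back into your troubleshooting session.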

2. Using AI for Annotating Code/ Scripts

Another great use case for incorporating AI to improve the efficiency of our daily data science and analytics work is to use it to annotate our code, adding explanations as part of documentation.

I’m a big proponent of annotation and documentation in general – so many hours are wasted sorting through someone’s code or even our own older code – trying to piece together what the intention of various code blocks was. Far too frequently, we can’t figure it out and just rewrite from scratch – wasting time both in the useless review as well as the re-do from scratch.

But the reason we so often find ourselves in that situation is that we’re all working as fast as we can to meet deadlines, and it’s such a pain and “time-suckage” to document along the way or to go back afterwards and document. It requires a lot of discipline, and even then, it is hard to maintain even on the best of data science teams.

AI can help with adding annotation to finalized code/ scripts – you can paste your code and ask it to add annotation that explains code chunks. You still need to review the output – particularly if this is a client deliverable – but it is way better than skipping documentation altogether and ending up with very little to work from when we need to replicate or re-visit the code.
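One quick review step for annotated Python code is to confirm that only comments changed and the logic did not. The sketch below is one way to do that, assuming the annotation was added as `#` comments: Python's `ast` module ignores comments when parsing, so the parsed trees of the original and annotated versions should match. The function name `same_logic` and the sample snippets are hypothetical.

```python
import ast


def same_logic(original_code: str, annotated_code: str) -> bool:
    """Return True if both versions parse to the same syntax tree.

    Comments are dropped by the parser, so added '#' comments do not count
    as a difference. Added docstrings DO change the tree, so a False result
    means "review manually", not necessarily "the code is broken".
    """
    original_tree = ast.dump(ast.parse(original_code))
    annotated_tree = ast.dump(ast.parse(annotated_code))
    return original_tree == annotated_tree


original = "total = 0\nfor x in [1, 2, 3]:\n    total += x\n"
annotated = (
    "# Start the running total at zero\n"
    "total = 0\n"
    "# Add each value to the running total\n"
    "for x in [1, 2, 3]:\n"
    "    total += x\n"
)
print(same_logic(original, annotated))  # True
```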

3. Using AI to Brainstorm Data Metrics

My favorite and the easiest way to use AI for our daily data science and analytics work is to help brainstorm data metrics for our project. This can be a huge time-saver as we can spend a lot of time just trying to generate our initial data pull requests – particularly at large complex organizations where no one really knows what is available or whether it is worth tracking down different data metrics.

Brainstorm sessions are always helpful, but it is sometimes hard to gather folks, and they come with various levels of background/ context. That variety is valuable too, because people come up with interesting ideas beyond what you would think of on your own.

However, AI is a good addition to quickly help you brainstorm ideas for data metrics for different data concepts. It can really cut down your research time and help you quickly develop a data request list that is more likely to yield something useful for your project.

For additional thoughts on brainstorming data metrics, you may be interested in this YouTube video I made on how to choose the best data for your project.

4. Using AI for Easy Translation for Non-Technical Audiences

Another use case I love for leveraging AI for our daily data science and analytics work is to generate easy translations for non-technical audiences.

As analysts and data scientists, we are often collaborating with stakeholders and presenting our work to audiences with varying technical backgrounds. The best way to shut down collaboration and turn off your audience is to throw out a lot of technical jargon that is essentially useless for helping them understand your project or your results.

As such, a key factor in the success of any data scientist or analyst is our ability to translate the technical work we’re doing into easy-to-understand phrases that increase collaboration as well as understanding of our projects and results. The time investment to develop this type of language, phrasing, and descriptions is significant. It’s often something that gets skipped over when time is short – leading to subpar results across the board for the project as well as your career trajectory.

This is where AI can easily help – similar to the annotation idea above – to help translate technical concepts into easy-to-understand chunks. You can paste in your goal and your attempt, and then use different prompts at different levels of understanding to see how it differs from your initial draft. This can help you quickly develop these phrases and talking points which will help take your presentations and career to a different level!

Example: Asking ChatGPT to explain AUC
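To check a plain-language explanation of AUC against something concrete, it can help to compute it on a tiny example. One common non-technical reading of AUC is "the chance that a randomly chosen positive case gets a higher score than a randomly chosen negative case." The sketch below computes exactly that by comparing every positive/negative pair; the function name `auc_by_pairs` and the scores are made up for illustration.

```python
def auc_by_pairs(labels, scores):
    """AUC as the share of (positive, negative) pairs where the positive scores higher.

    Ties count as half a win. Labels are expected to be 1 (positive) or 0 (negative).
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("Need at least one positive and one negative case")

    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Made-up example: three positive cases and three negative cases
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
print(round(auc_by_pairs(labels, scores), 3))  # 0.889
```

Here the model ranks the positive case above the negative case in 8 of the 9 possible pairs, which is a talking point most non-technical audiences can follow.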

5. Using AI to Refine Methodology / Research Design

And the last way to leverage AI for our daily data science and analytics tasks is to help us refine our methodology/ research design.

For all of our projects, we have a phase of choosing our modeling and analytic approach. Part of that process is assessing the requirements as well as pros/ cons of each model/ analytics approach we are considering relative to our project needs – the data we’re using, the outputs we need, and the deliverable use case.

This is actually a weak area for a lot of projects – where people just jump to a default of what is familiar without making these assessments – which often leads to model performance issues down the line or analytics insights that turn out to not be impactful because the methodology was faulty.

This is a great place where AI can help us – we can ask prompts that help with mapping the right model/ analytics approach to our project specifics in terms of data, outputs, and deliverable use case. We can quickly refresh on the requirements of different models and assess pros/ cons much faster than if we’re just going by memory or random googling.
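If you find yourself asking this kind of question often, a small reusable prompt template can keep the three project specifics (data, outputs, deliverable use case) front and center. This is only a sketch of one way to structure it: `ProjectSpec`, `build_methodology_prompt`, and the example project details are all hypothetical, and the resulting text is something you would paste into whichever AI tool you use.

```python
from dataclasses import dataclass


@dataclass
class ProjectSpec:
    """The three project specifics to map a methodology against (illustrative)."""
    data: str
    outputs: str
    use_case: str


def build_methodology_prompt(spec: ProjectSpec, candidates: list[str]) -> str:
    """Assemble a prompt asking for requirements and pros/cons of each candidate approach."""
    options = ", ".join(candidates)
    return (
        "I am choosing a modeling/analytics approach for a project.\n"
        f"Data: {spec.data}\n"
        f"Outputs needed: {spec.outputs}\n"
        f"Deliverable use case: {spec.use_case}\n"
        f"Candidate approaches: {options}\n"
        "For each candidate, list its data requirements and key assumptions, "
        "then its pros and cons for this specific project."
    )


# Hypothetical project details for illustration
spec = ProjectSpec(
    data="12 months of customer transactions, about 50k rows",
    outputs="a churn probability per customer",
    use_case="monthly list for the retention team",
)
prompt = build_methodology_prompt(spec, ["logistic regression", "random forest"])
print(prompt)
```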

For additional thoughts on choosing models for analysis, you may be interested in this YouTube video I made on how to choose the best models and analytics approaches for your project.

That’s it for now – hope you find these tips useful!

-Dr. Christy